Add buffer support for payloadSizeLimiter #197

No reviewers

Labels

No labels

P0

P1

P2

P3

good first issue

pool

Infrastructure

blocked

bug

config

discussion

documentation

duplicate

enhancement

go

help wanted

internal

invalid

kludge

observability

perfomance

question

refactoring

wontfix

No milestone

No project

No assignees

6 participants

Notifications

Due date

No due date set.

Dependencies

No dependencies set.

Reference: TrueCloudLab/frostfs-sdk-go#197

Loading…

Add table

Reference in a new issue

No description provided.

Delete branch "achuprov/frostfs-sdk-go:buff_pool"

Deleting a branch is permanent. Although the deleted branch may continue to exist for a short time before it actually gets removed, it CANNOT be undone in most cases. Continue?

Close: #155

Example:

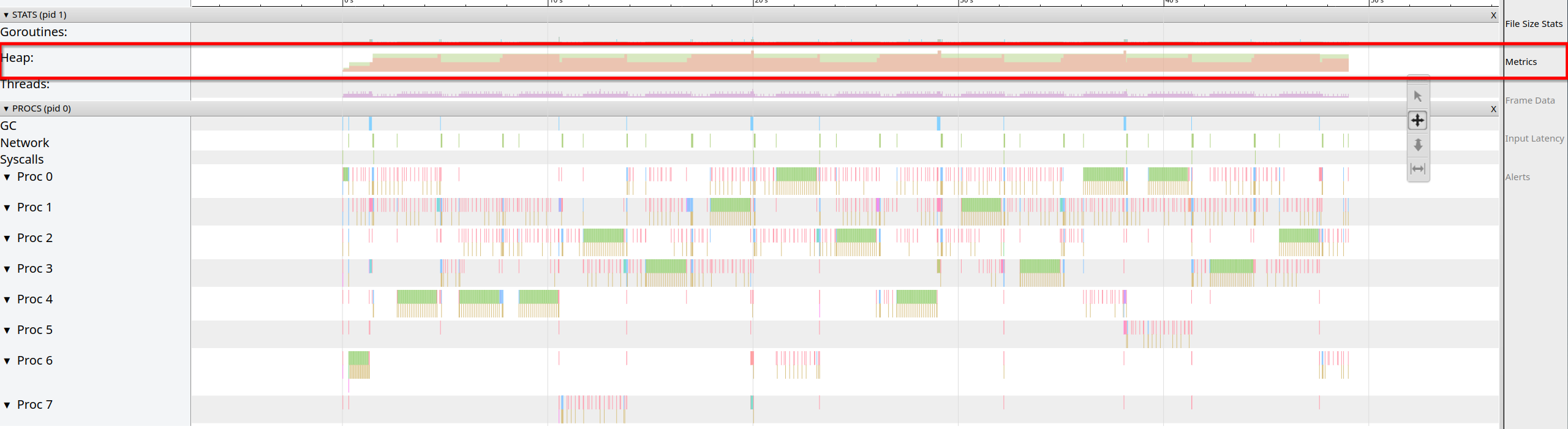

./frostfs-cli object put -r s01.frostfs.devenv:8080 --config conf.yml --file 1gb --cid cid --prepare-locallyBefore:

Benchmark

goos: linux

goarch: amd64

pkg: git.frostfs.info/TrueCloudLab/frostfs-sdk-go/object/transformer

cpu: 11th Gen Intel(R) Core(TM) i5-1135G7 @ 2.40GHz

After:

Benchmark

goos: linux

goarch: amd64

pkg: git.frostfs.info/TrueCloudLab/frostfs-sdk-go/object/transformer

cpu: 11th Gen Intel(R) Core(TM) i5-1135G7 @ 2.40GHz

Signed-off-by: Alexander Chuprov a.chuprov@yadro.com

[#155] sdk-go: Add buffer support for payloadSizeLimiterto Add buffer support for payloadSizeLimiterefe8d55ce4tod9d482c6b6@ -12,9 +12,12 @@ import (func (c *Client) objectPutInitTransformer(prm PrmObjectPutInit) (*objectWriterTransformer, error) {var w objectWriterTransformerpool := transformer.NewBytePool(prm.MaxSize)I prefer to add pool optional. If it is possible, add so please.

@ -0,0 +26,4 @@}func (bsp *BytePool) Put(s []byte) {if cap(s) < int(bsp.size) {I think It must be

if cap(s) > int(bsp.size)@ -0,0 +9,4 @@size uint64}func NewByteSlicePool(initialCapacity uint64) *BytePool {We have such implementation already: https://git.frostfs.info/TrueCloudLab/frostfs-api-go/src/branch/master/util/signature/buffer.go

d9d482c6b6to14a056fa02@ -232,4 +228,0 @@},key: v2acl.FilterObjectType,value: "STORAGE_GROUP",},STORAGE_GROUP has been removed in TrueCloudLab/frostfs-api#32

@ -199,0 +212,4 @@if s.Pool != nil {var buffer buffPool.Bufferbuffer.Data = s.payloaddefer s.Pool.Put(&buffer)Why do we use

deferhere?14a056fa02to7606ce7f87LGTM

Very nice

@ -141,0 +144,4 @@s.payload = make([]byte, 0, payloadSize)} else {if payloadSize == 0 {payloadSize = s.MaxSizeSo we will allocate 64 MiB even for 1KiB object?

There is a benchmark in the transformer package btw, could be useful.

@achuprov the question is still valid

fixed

@ -199,0 +212,4 @@if s.Pool != nil {var buffer buffPool.Bufferbuffer.Data = s.payloads.Pool.Put(&buffer)If it is too big, it will be dropped, because each buffer pool has some max size for slices, right?

Yes

if cap(buf.Data) > int(pool.poolSliceSize) {7606ce7f87tofb97eb1106Previously, the buffer would return the

transformerto the pool after being used. However, this buffer could still be referenced by anobject, potentially leading to data corruption. Now, the buffer is returned to the pool by theobjectitself.Fixed handling of small objects

Example of usage: TrueCloudLab/frostfs-node#939

@ -37,2 +38,4 @@Key *ecdsa.PrivateKey//Optional. It makes sense to pass this if the file is large.Maybe it's better to change

if the file is large->if the object is large?We write that it is optional, but do not say what it is and why we may need this.

Can we extend the description and use appropriate godoc format? (

// Pool is ...)Also, other optional fields do not have comment, not sure we need it.

Fixed

@ -141,0 +149,4 @@buffer := s.Pool.Get(uint32(payloadSize))s.payload = buffer.Datas.payload = s.payload[:0]Can we just write

?

Fixed

@ -110,6 +112,8 @@ func (it *internalTarget) tryPutSingle(ctx context.Context, o *object.Object) (b}if err == nil {it.returnBuffPool(o.Payload())We must put to pool only something we have taken from it.

Also, can we panic if the pool is missing?

Fixed

@ -21,3 +22,3 @@tt := new(testTarget)target, pk := newPayloadSizeLimiter(maxSize, 0, func() ObjectWriter { return tt })var contextTest benchContextIt is optional parameter, should not affect correctness, let's write a separate test if needed.

It is optional parameter, should not affect correctness, let's write a separate test if needed.

@ -154,3 +159,3 @@b.ResetTimer()for i := 0; i < b.N; i++ {f, _ := newPayloadSizeLimiter(maxSize, uint64(sizeHint), func() ObjectWriter { return benchTarget{} })var context benchContextI would rather not use pool in benchmarks, or write a separate one.

Removed the use of pool in benchmark.

fb97eb1106to2bcd4e9e522bcd4e9e52tob7bf231269b7bf231269to74f09de408@ -296,1 +305,4 @@func (s *payloadSizeLimiter) writeHashes(chunk []byte) error {// The buffer pool requires that the capacity must be <= MaxSize.// Using append to increase size could lead to cap > MaxSize and len < MaxSize.if cap(s.payload) < len(s.payload)+len(chunk) {It is not a problem worth solving, it is rare and the code with calculations is quite complicated, while the benefits are not obvious. Consider a common case:

MaxSizeis 128 MiBAgreed, I have removed this optimization.

74f09de408to4695c32c6f4695c32c6fto1af9b6d18b