Index attributes for non-S3 containers #1412

No reviewers

TrueCloudLab/storage-core-developers

Labels

No labels

P0

P1

P2

P3

badger

frostfs-adm

frostfs-cli

frostfs-ir

frostfs-lens

frostfs-node

good first issue

triage

Infrastructure

blocked

bug

config

discussion

documentation

duplicate

enhancement

go

help wanted

internal

invalid

kludge

observability

perfomance

question

refactoring

wontfix

No milestone

No project

No assignees

2 participants

Notifications

Due date

No due date set.

Dependencies

No dependencies set.

Reference: TrueCloudLab/frostfs-node#1412

Loading…

Add table

Reference in a new issue

No description provided.

Delete branch "dstepanov-yadro/frostfs-node:fix/metabase_index_some_attr"

Deleting a branch is permanent. Although the deleted branch may continue to exist for a short time before it actually gets removed, it CANNOT be undone in most cases. Continue?

Changes:

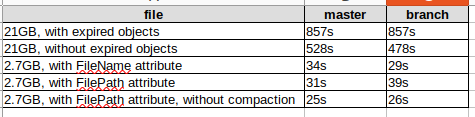

metabase.Putstores indexes forFilePathandS3-Access-Box-CRDT-Nameuser attributes for non S3 containers (containers with.s3-location-constraintattribute assumed as S3 containers)metabase.Selectuses those indexes for select for non S3 containersfrostfs-adm metabase upgradeconnects to blockchain to check container type on metabase upgradefrostfs-adm metabase upgradeworks now only with config files, no--pathargument could be specified as it requires also morph connection, which leads to a lot of arguments, and this is hard to maintainsupport/v0.38branch) a lot of junk could be stored in metabasemetabase.Deletedeletes user attribute containers if they are emptyPerf tests:

About last 2 results: with FilePath attribute size of metabase is larger, so compaction performs longer

2e91ec7e9dtob2d143aac6b2d143aac6toa9bf076a7ea9bf076a7eto0c014166e6@ -16,4 +22,3 @@)const (pathFlag = "path"Now metabase upgrade requires morph connection to resolve container. It is required to specify a lot of flags/args to pass required morph parameters. So now for metabase paths and morph endpoints only config is used.

0c014166e6to896b667d83896b667d83to002b2d1cc3002b2d1cc3todea227c872dea227c872to4716edc6a4@ -36,0 +41,4 @@}}func testSelect2(t *testing.T, db *meta.DB, cnr cid.ID, fs objectSDK.SearchFilters, useAttrIndex bool, exp ...oid.Address) {No imagination, sorry.

@ -418,0 +439,4 @@return nil}if err := bkt.DeleteBucket(item.key); err != nil {Differs from v2: bucket is now deleted if there are no more entries in it.

@ -384,0 +423,4 @@// user specified attributesfor i := range attrs {if _, index := indexedAttributes[attrs[i].Key()]; !index {continueDeleterelated: since the version of the metabase has been changed, there should be no indexes for other attributes.@ -259,6 +279,81 @@ func bucketNamesForType(cnr cid.ID, mType objectSDK.SearchMatchType, typeVal strreturn}func (db *DB) selectFromFKBT(The same code as from

support/v0.422650d85186toeac1f93c97eac1f93c97to6b5b44dc986b5b44dc98to4519846f99WIP: Index attributes for non-S3 containersto Index attributes for non-S3 containersHonestly, I do not like the idea of carrying some bool flag together with the object through the whole data-path, the metabase is leaking all the way up to storage service.

I'd like to discuss possible alternatives.

I would rather have

pkg/core/object.ObjectWIthIndexstruct where such info could be stored (where we can convert between object and objectwithindex, but the latter always contains info about s3, being impossible to construct otherwise).Another option is to have

PutandPutIndexed(obj)(the latter may contain a list of attributes), leaving all the branching as high as possible.My last suggestion is to have interface in the metabase itself. The dificulty here is to ensure we make no network requests during a transaction. It should be possible with some sort of pinning in our LRU cache and ensuring caller always fetches the container. This is the smallest solution in terms of files changed.

If there is no favourable solution, let's stick with the one in the PR.

My another concern is

Select-- it seems unnecessary to provide an option there, because on Put we can save all necessary info about the container in the metabase. And if there are no objects, we can just return an empty result anyway. Am I missing something?@ -384,0 +422,4 @@// user specified attributesfor i := range attrs {if _, index := indexedAttributes[attrs[i].Key()]; !index {use

IsAttributeIndexable?fixed

@ -218,7 +223,13 @@ func (db *DB) selectFastFilter(selectAllFromBucket(tx, primaryBucketName(cnr, bucketName), to, fNum)selectAllFromBucket(tx, tombstoneBucketName(cnr, bucketName), to, fNum)selectAllFromBucket(tx, bucketNameLockers(cnr, bucketName), to, fNum)default:How come we had no code here before, where is the attribute search?

In slow filters

@ -262,0 +288,4 @@) { //matchFunc, ok := db.matchers[f.Operation()]if !ok {db.log.Debug(logs.MetabaseMissingMatcher, zap.Uint32("operation", uint32(f.Operation())))Doesn't

f.Operation()have a string method?fixed

@ -512,3 +607,3 @@res.fastFilters = append(res.fastFilters, filters[i])default:res.slowFilters = append(res.slowFilters, filters[i])if _, indexed := indexedAttributes[filters[i].Header()]; useAttributeIndex && indexed {use

IsIndexedAttribute?fixed

@ -170,6 +169,28 @@ func smallBucketName(cnr cid.ID, key []byte) []byte {return bucketName(cnr, smallPrefix, key)}// attributeBucketName returns <CID>_attr_<attributeKey>.This comment seems wrong.

fixed

@ -275,6 +275,27 @@ func (s *Shard) refillObject(ctx context.Context, data []byte, addr oid.Address,return nil}var hasIndexableAttribute boolHow about helpers from

slicespackage?hasIndexableAttribute = slices.IndexFunc(obj.Attributes(), func(a) { meta.IsAttributeIndexable(a.Key()) }) > 0fixed

@ -26,3 +27,3 @@// SetContainerID is a Select option to set the container id to search in.func (p *SelectPrm) SetContainerID(cnr cid.ID) {func (p *SelectPrm) SetContainerID(cnr cid.ID, isS3Container bool) {It is a bit strange to see

S3on the shard level, how aboutisIndexedContainer?ok, fixed

@ -28,3 +29,3 @@var errInvalidECPlacement = errors.New("invalid EC placement: EC placement must have one placement vector with at least one node")func (p *Policer) processECContainerObject(ctx context.Context, objInfo objectcore.Info, policy netmap.PlacementPolicy) error {func (p *Policer) processECContainerObject(ctx context.Context, objInfo objectcore.Info, policy netmap.PlacementPolicy, cnr containerSDK.Container) error {If we pass the container, we can omit placement policy to reduce argument number and avoid duplication:

policy == cnr.PlacementPolicy().fixed

@ -453,3 +454,3 @@}func (r *testReplicator) HandleLocalPutTask(ctx context.Context, task replicator.Task) {func (r *testReplicator) HandleLocalPutTask(ctx context.Context, task replicator.Task, cnr containerSDK.Container) {Why have you decided not to make it a part of

replicator.Task?fixed

I don't think that

Searchis the large used RPC call, so having one more network request about container is not so bad in comparison with storing addition info for each container in metabase, which is used for every user request.4519846f99to91a948073391a9480733to05c1fe8d1a05c1fe8d1ato1b9f5fb7eb1b9f5fb7ebtod491494295@ -262,0 +288,4 @@) { //matchFunc, ok := db.matchers[f.Operation()]if !ok {db.log.Debug(logs.MetabaseMissingMatcher, zap.Stringer("operation", f.Operation()))Oh, we should not log inside the transaction, it can easily hang the db.

Do we do it anywhere else currently?

If no, let's remove.

Done.

d491494295to44f7bccb1e@ -1417,0 +1423,4 @@return container.NewInfoProvider(func() (container.Source, error) {// threadsafe: called on init or on sighup when morph initializedif c.cfgMorph.client == nil {initMorphComponents(ctx, c)Do we call

containerClient.NewFromMorphon each invocation instead of once?No:

container.InfoProviderusessync.Onceto createcontainer.Source@ -0,0 +36,4 @@func NewInfoProvider(sourceFactory func() (Source, error)) InfoProvider {return &infoProvider{mtx: &sync.RWMutex{},cache: make(map[cid.ID]infoValue),Does this cache have unrestricted size?

Yes: for 10 000 containers this requires only (32 bytes + 4 bytes) * 10 000 = 360 KB of memory, so cache capacity leads only for complexity. Adding TTL is also redundant, because the only case for TTL is if container was deleted, but this leads only to some extra IO operations, not to errors.

44f7bccb1etoc065d55ca3