Speed up metabase refill #1027

4 participants


Reference: TrueCloudLab/frostfs-node#1027


Relates #1024

Relates #1029

According to the benchmark results, performance stopped improving once the number of concurrently processed objects reached 250. I decided to set the default value to 500 so that metabase refill runs faster on more powerful systems. The difference between 250 and 500 goroutines does not look significant.

Another approach I considered was to first read a batch of objects and then send them to the metabase for writing concurrently. This did not lead to a noticeable performance gain.

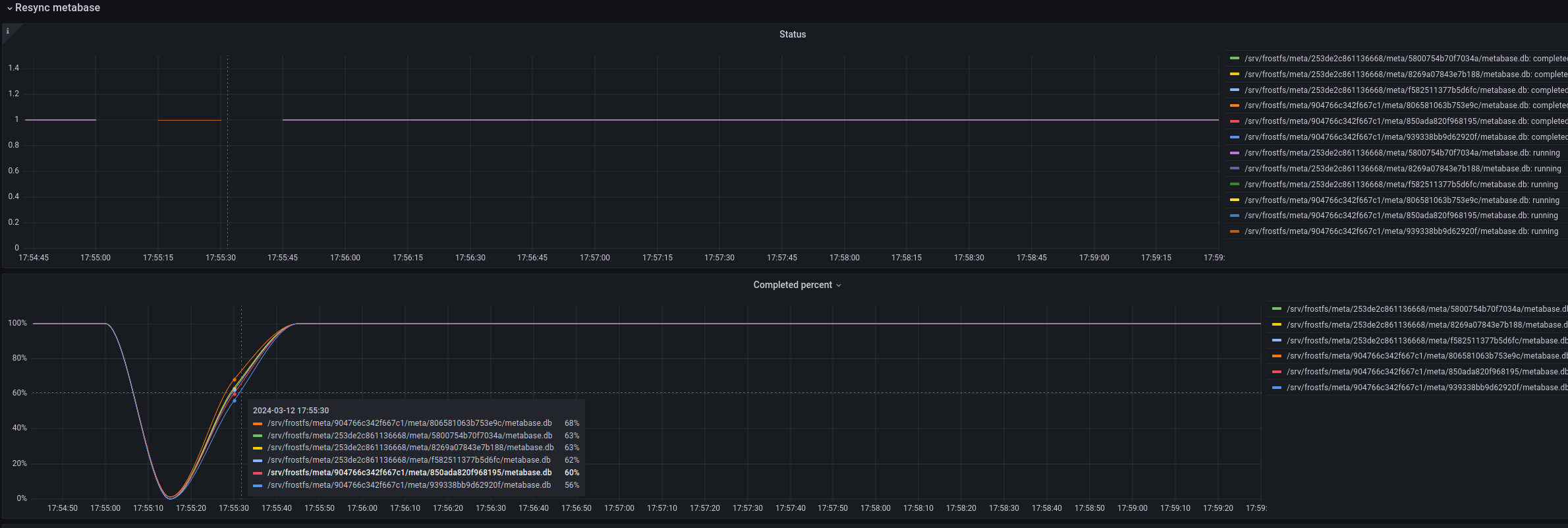

Metabase refill metrics were also added.

Benchmark results:

master

RefillMetabaseWorkersCountDefault = 10

RefillMetabaseWorkersCountDefault = 100

RefillMetabaseWorkersCountDefault = 250

RefillMetabaseWorkersCountDefault = 500

RefillMetabaseWorkersCountDefault = 1000

RefillMetabaseWorkersCountDefault = 2000

Metrics dashboards:

@ -160,2 +160,3 @@

    if !prm.withoutData {
    -	elem.data = v
    +	data := make([]byte, 0, len(v))
    +	elem.data = append(data, v...)

`v` is only valid for the `tx` lifetime, so it must be copied before it can be used in a separate goroutine.

`bytes.Clone()`?

`bytes.Clone()` doesn't preallocate enough capacity.

It does. It may overallocate, though, but it is the solution from the stdlib.

I don't mind leaving it as is.

fixed
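The lifetime issue discussed in this thread can be reproduced in isolation. The sketch below is an assumption-laden simulation, not metabase code: `iterate` is a hypothetical iterator that reuses one buffer for every value, which mimics a slice that is only valid while the callback (or transaction) is running. `collect` keeps the values either as-is or via `bytes.Clone`.

```go
package main

import (
	"bytes"
	"fmt"
)

// iterate is a hypothetical iterator. The slice passed to fn is backed by
// a buffer that is overwritten on the next step, so it is only valid for
// the duration of the callback.
func iterate(values []string, fn func(v []byte)) {
	buf := make([]byte, 0, 16)
	for _, s := range values {
		buf = append(buf[:0], s...)
		fn(buf)
	}
}

// collect retains every value seen by iterate. When copyValue is false it
// keeps the borrowed slice, which is the bug; when true it copies first.
func collect(values []string, copyValue bool) []string {
	var kept [][]byte
	iterate(values, func(v []byte) {
		if copyValue {
			v = bytes.Clone(v) // independent copy that outlives the callback
		}
		kept = append(kept, v)
	})
	out := make([]string, len(kept))
	for i, b := range kept {
		out[i] = string(b)
	}
	return out
}

func main() {
	values := []string{"aaa", "bbb"}
	fmt.Println(collect(values, false)) // prints [bbb bbb]: both entries alias the reused buffer
	fmt.Println(collect(values, true))  // prints [aaa bbb]
}
```

The `make([]byte, 0, len(v))` plus `append` form from the diff produces the same independent copy with exactly `len(v)` capacity; `bytes.Clone` may allocate slightly more.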

8727d55371 to 7e1b5b8c37

7e1b5b8c37 to 4c479b861d

d92a98923a to 0d91716e81

0d91716e81 to 78a96c8182

387ec06718 to a122ef3cd9

a122ef3cd9 to d4e315aba0

@ -187,2 +202,3 @@

    obj := objectSDK.New()
    withDiskUsage := true
    duCtx, cancel := context.WithTimeout(ctx, 10*time.Minute) // if compute disk usage takes too long, then just skip this step

Why not move the timeout to the config?

I can't imagine a situation in which we would change this.

d4e315aba0 to dfc2fe2ebf

LGTM

Doesn't this close #1024?

It does. But I prefer to close tasks manually with comments.

dfc2fe2ebf to 182ec28f1c

@ -18,0 +49,4 @@

    for i := range b.storage {
    	path := b.storage[i].Storage.Path()
    	eg.Go(func() error {
    		return filepath.WalkDir(path, func(path string, d fs.DirEntry, _ error) error {

This won't work for blobovniczas:

This becomes worse the more small objects we have.

Have you considered using a naive but correct implementation? (Traverse blobovniczas, maybe even count objects rather than their size.)

That's why the method is called `DiskUsage`, not `DataSize`. Every blobovnicza stores its data size, but getting this value requires opening and closing the db. Since it is used only for metrics, I think it is enough to calculate only the disk size.

But then the metrics won't reflect what we expect them to reflect (the progress of the operation).

Agree, fixed.

Object count is now used for metrics; data size is hard to compute if compression is enabled.
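A progress metric based on object count, as settled on in this thread, can be sketched roughly as follows. The `refillProgress` type and its methods are hypothetical and only show the idea: count the objects up front, then increment an atomic counter as workers finish. How the real metrics are registered and exported is not shown in this PR page.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// refillProgress is a hypothetical progress tracker: total is the number
// of objects counted before the refill starts, processed is bumped by the
// workers as they finish each object.
type refillProgress struct {
	total     uint64
	processed atomic.Uint64
}

// objectDone records one processed object; safe for concurrent use.
func (p *refillProgress) objectDone() { p.processed.Add(1) }

// percent reports completion in the range 0..100.
func (p *refillProgress) percent() float64 {
	if p.total == 0 {
		return 100 // nothing to do counts as complete
	}
	return float64(p.processed.Load()) / float64(p.total) * 100
}

func main() {
	p := &refillProgress{total: 8}
	var wg sync.WaitGroup
	for i := 0; i < 4; i++ { // pretend half of the objects are done
		wg.Add(1)
		go func() {
			defer wg.Done()
			p.objectDone()
		}()
	}
	wg.Wait()
	fmt.Printf("%.0f%%\n", p.percent())
}
```

Counting objects sidesteps the compression problem mentioned above, since the counter does not depend on how many bytes each object occupies on disk.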

@ -187,2 +202,3 @@

    obj := objectSDK.New()
    withDiskUsage := true
    duCtx, cancel := context.WithTimeout(ctx, 10*time.Minute) // if compute disk usage takes too long, then just skip this step

It looks strange to me: we can wait for 10 minutes and still not receive any metrics.

Ironically, the situations in which disk usage calculation takes too long are also likely the ones where having metrics provides a huge QOL improvement. Metabase resync takes on the order of tens of hours for big disks; 10 minutes is nothing in comparison.

So why don't we allow ourselves to hang here?

Fixed.

182ec28f1c to 0306fdc818

0306fdc818 to 23fdee2e97

23fdee2e97 to b66ea2c713

Speed up metabase refill to WIP: Speed up metabase refill

b66ea2c713 to 6e038936cf

6e038936cf to 1b17258c04

WIP: Speed up metabase refill to Speed up metabase refill